After 4 epochs, the loss values turn to NaN.

This function takes the log of the prediction, which diverges as the prediction approaches zero. I have found that the loss becomes NaN in the attention layers, and simply using FP32 for attention solves the problem. It is a seq2seq transformer model, trained with the Adam optimizer and a cross-entropy criterion.
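To make the divergence of the log concrete, here is a minimal framework-free sketch in plain Python. The function names (`naive_cross_entropy`, `stable_cross_entropy`, `softmax`) are hypothetical and chosen for illustration; they are not taken from the original model. The sketch compares cross entropy computed from probabilities with cross entropy computed directly from logits using the log-sum-exp trick.

```python
import math

def softmax(logits):
    """Softmax with the usual max-subtraction; tiny probabilities can still underflow to 0."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def naive_cross_entropy(probs, target):
    """-log(p[target]); returns inf once the probability has underflowed to zero."""
    p = probs[target]
    return -math.log(p) if p > 0.0 else math.inf

def stable_cross_entropy(logits, target):
    """Cross entropy from raw logits via log-sum-exp, never taking log(0)."""
    m = max(logits)  # subtracting the max keeps every exponent <= 0
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

logits = [1000.0, 0.0, -1000.0]
probs = softmax(logits)                  # the probability of class 2 underflows to 0.0
print(naive_cross_entropy(probs, 2))     # inf
print(stable_cross_entropy(logits, 2))   # 2000.0
```

In PyTorch, `torch.nn.CrossEntropyLoss` expects raw logits for this reason, so applying a softmax before it is usually a mistake. Whether that is the cause in the attention case described above is an assumption; the FP32 workaround suggests a reduced-precision overflow instead.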

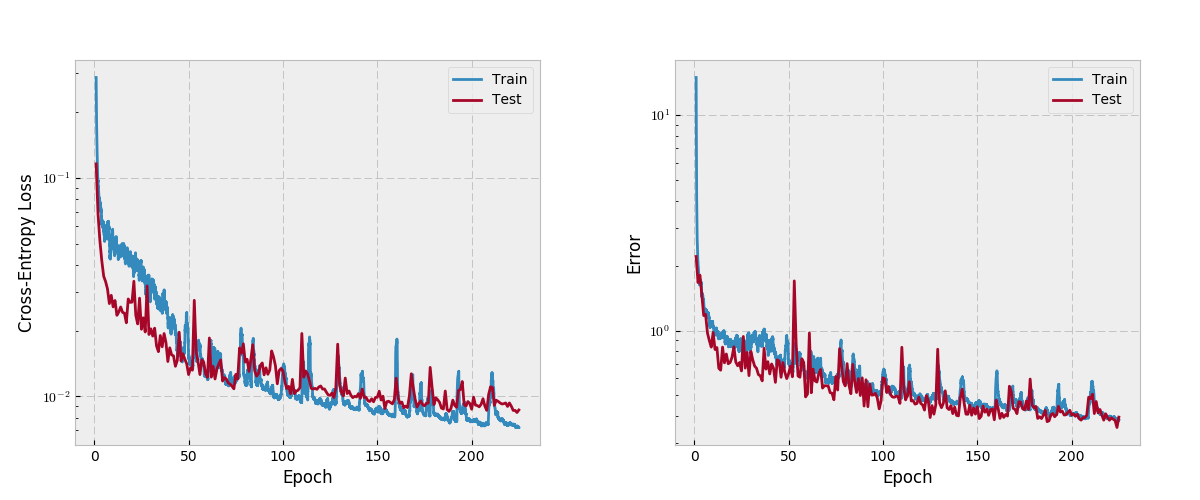

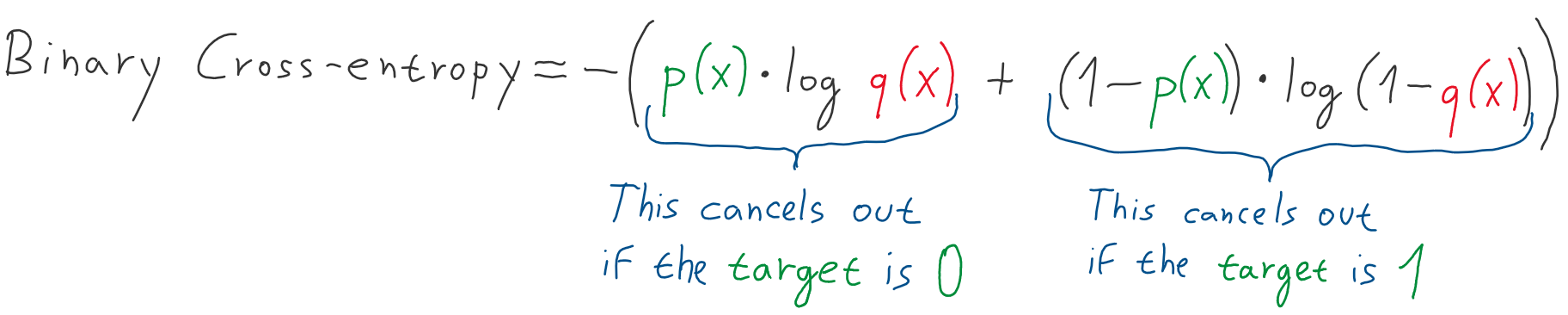

I assume that you have a basic understanding of neural networks and the PyTorch library. This is a continuation of Part 1. For y = 1, the loss is as high as the value of x.

The minimal required entries in the dictionary are (shapes in brackets): ``prediction`` (batch_size x n_decoder_time_steps x n_outputs, or a list thereof with one entry per target): re-scaled predictions that can be fed to

After 4 epochs, the loss values turn to NaN. I have a sigmoid activation function in the output layer to squeeze the output between 0 and 1, but maybe it doesn't work properly. I am probably doing something stupid, but I can't figure it out.

The ONNX model is parsed into a TensorRT model, serialized, and loaded, and a context is created and executed, all successfully with no errors logged.

The target itself should be something the loss function can compute on, for example

I have a model that uses gradient checkpointing and DDP. This is not the case in

Photo by Antoine Dautry on Unsplash.

If you suspect a bug in a framework, please isolate the part that concerns you and let us know.

A loss function helps us interact with a model and tell it what we want; this is why loss functions are classified as "objective functions". You can choose any function that fits your project, or create your own custom function.

Hi, I'm trying to create a simple feed-forward NN, but computing the loss returns NaN.

Weights start out as NaN (PyTorch): I am trying to build a regression model with 4 features and one output.
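For the sigmoid-output case mentioned above, one common source of NaN or inf is taking the log of a sigmoid that has saturated to exactly 0 or 1. The following plain-Python sketch uses hypothetical helper names and is not the poster's code. It contrasts binary cross entropy computed on the probability with the algebraically equivalent form computed on the raw logit.

```python
import math

def sigmoid(x):
    """Sigmoid written so that exp() never overflows."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def bce_from_probability(p, y):
    """Naive binary cross entropy on a probability p; breaks when p is exactly 0 or 1."""
    return -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))

def bce_from_logit(x, y):
    """Stable form on the raw logit x: max(x, 0) - x*y + log(1 + exp(-|x|))."""
    return max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x)))

x, y = 40.0, 0.0                 # confident but wrong prediction
p = sigmoid(x)                   # rounds to exactly 1.0 in float64
try:
    bce_from_probability(p, y)   # needs log(1 - 1.0) = log(0)
except ValueError as err:
    print("naive form failed:", err)
print(bce_from_logit(x, y))      # finite, approximately 40
```

PyTorch offers `torch.nn.BCEWithLogitsLoss`, which combines the sigmoid and the binary cross entropy in one numerically safer step; with it, the model's last layer should output logits rather than sigmoid activations.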

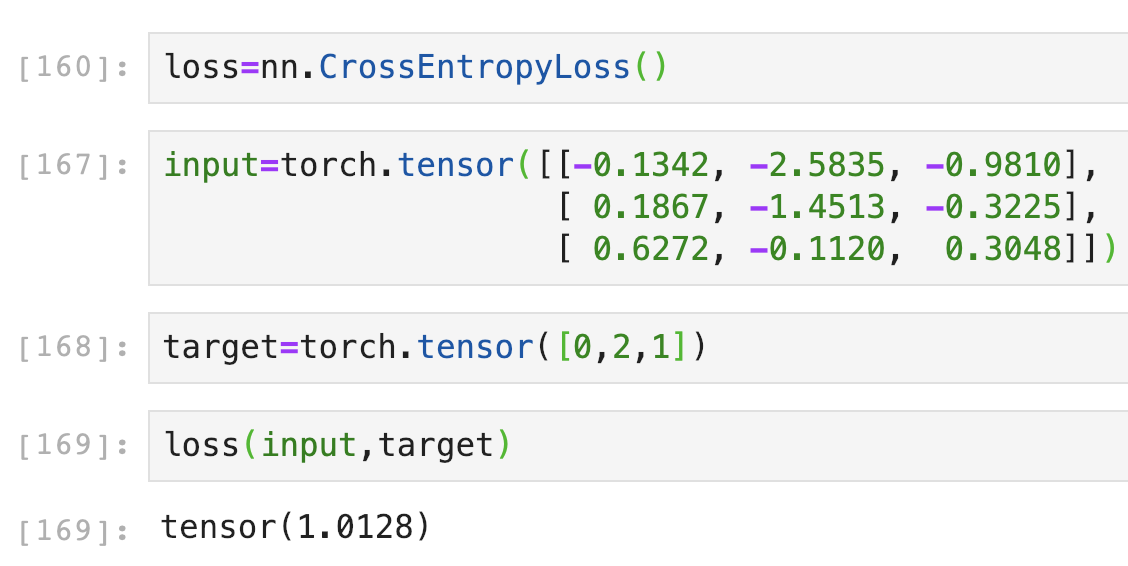

# Cross entropy loss in PyTorch: how to

All the other code that we write is built around this: the exact specification of the model, how to fetch a batch of data and labels, the computation of the loss, and the details of the optimizer.

How to avoid loss = NaN while training a deep neural network using Caffe.

Linear (5, 3) # Output layer has a size of 3

Cases where loss = NaN appears during PyTorch training: 1.

The wrapped closure would be guaranteed to produce non-inf/NaN gradients, so it could safely be passed along to self.

Hopefully this article will serve as your quick-start guide to using PyTorch loss functions in your machine learning tasks.

tensor( for xx in range(100

Too high a learning rate.

A brief introduction to loss functions to help you decide what's right for you. This article aims to explain the role of the loss function in a neural network. Each of the variables train_batch, labels_batch, output_batch and loss is a PyTorch Variable and allows derivatives to be calculated automatically.
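The "too high learning rate" cause listed above can be reproduced without any framework. The sketch below is a toy example, not any poster's model: it runs plain gradient descent on f(w) = w**2, and the function names are hypothetical. With a small step the iterate shrinks toward zero; with a step that is too large it grows until it overflows to inf, and the next update computes inf - inf, which is NaN.

```python
import math

def gradient_descent(lr, steps=2000, w0=1.0):
    """Minimise f(w) = w**2 with fixed-step gradient descent; return the final w."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w       # derivative of w**2
        w = w - lr * grad    # with lr = 1.5 this is w -> -2w, so |w| doubles each step
    return w

def first_nonfinite_step(lr, steps=2000, w0=1.0):
    """Return the 1-based update index at which w stops being finite, or None."""
    w = w0
    for step in range(1, steps + 1):
        w = w - lr * (2.0 * w)
        if not math.isfinite(w):
            return step
    return None

print(gradient_descent(lr=0.1))   # a value very close to 0
print(gradient_descent(lr=1.5))   # nan
```

A check like `first_nonfinite_step` is the same idea as testing the loss with `math.isfinite` (or `torch.isfinite` in PyTorch) on every iteration and stopping at the first bad step, which narrows down where the NaN first appears.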

By default, NaNs are replaced with zero, and positive infinity is replaced with the greatest finite value representable by

CTC loss gets NaN after some epochs in PyTorch 1.

Any advice? Here is my architecture: class Net (nn.

I'm implementing a neural network with Keras, but the Sequential model returns NaN as the loss value.

I'm using autocast with GradScaler to train in mixed precision.

Steps to Drop Rows with NaN Values in Pandas DataFrame. Step 1: Create a DataFrame with NaN Values.

So, I wonder if there is a problem with my function. We went through the most common loss functions in PyTorch.
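The replacement rule described above (NaN becomes zero, infinities become the largest finite values) can be sketched in plain Python as follows. The helper `nan_to_num` here is a hypothetical stand-in written for illustration; it mirrors the described defaults for 64-bit floats and is not the library implementation.

```python
import math
import sys

def nan_to_num(values, nan=0.0, posinf=None, neginf=None):
    """Replace NaN and +/-inf in a list of floats (illustrative helper only)."""
    hi = sys.float_info.max if posinf is None else posinf    # greatest finite float64
    lo = -sys.float_info.max if neginf is None else neginf   # least finite float64
    cleaned = []
    for v in values:
        if math.isnan(v):
            cleaned.append(nan)
        elif v == math.inf:
            cleaned.append(hi)
        elif v == -math.inf:
            cleaned.append(lo)
        else:
            cleaned.append(v)
    return cleaned

print(nan_to_num([1.5, math.nan, math.inf, -math.inf]))
```

Cleaning values this way hides the symptom rather than the cause, so it is usually better suited to sanitising input data than to patching a loss that has already become NaN.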
